Is Emotion AI, or artificial emotional intelligence, going to change the way we connect and communicate with our devices?

By MYBRANDBOOK

Rana, co-founder and CEO of Affectiva, pioneered the category of Emotion AI and is now pioneering Human Perception AI: technology that can understand all things human. A passionate advocate for innovation, ethics in AI and diversity, Rana has been recognized on Fortune’s 40 Under 40 and Forbes America’s Top 50 Women in Tech, and she is a World Economic Forum Young Global Leader. She holds a PhD from the University of Cambridge and completed a postdoctoral fellowship at MIT. She was speaking at a Guru session at Dell Technologies World in Las Vegas, USA.

Affectiva began as a team at the MIT Media Lab and spun off on a mission to humanize technology. Rana has spent the last 20 years of her career on this mission: building machines that can understand all things human. The team leverages computer vision, machine learning, deep learning and affective computing to build algorithms that understand people's emotional states, cognitive states, behaviors and interactions, and applies them to the devices and technologies around us to make those devices more effective.

At the Media Lab, we had access to a lot of Fortune 500 companies, and every time they visited the lab they would express an interest in commercializing, buying or licensing the technology. After a few years of research at MIT, we realized there was a huge commercial opportunity, which provided the impetus for us to spin out and start the company.

Speaking on Emotion AI, or artificial emotional intelligence: is it just going to change the way we connect and communicate with our devices? I believe it's fundamentally going to change the way we connect and communicate with people. As AI becomes ingrained in every aspect of our lives (we've seen some examples in Eric's presentation), it is starting to take on tasks that were traditionally done by humans, such as driving your car, assisting with your healthcare, and even hiring your next co-worker.

We think about this problem between humans and machines in terms of reciprocal trust. Unfortunately, there are a lot of examples where this trust has already gone wrong: the chatbot on Twitter that turned racist within 24 hours, and self-driving cars involved in fatal accidents. Perhaps the example most relevant to the work I do is facial recognition; there has been a lot of criticism of facial recognition technologies that discriminate against people. Consider what helps people do better in their lives: it's not just your cognitive intelligence, it's your emotional intelligence. People with higher EQ tend to be more likable and more persuasive, and they are more successful in both their professional and personal lives. Think a little about your own life, too: we know that we can't work or live alongside people we don't trust, and I argue that the same is true for our AI systems. She then turned to whether technology could identify emotions just as humans can.

What if your computer could tell the difference between a smile and a smirk? Both involve the lower half of the face, but they have very different meanings. What if online learning apps could detect students' emotional engagement and adapt accordingly?

Second, when you walk into the doctor's office today, they don't ask you what your temperature or blood pressure is; they just measure it. But in the world of mental health, the ground truth is still a scale from one to 10: How much pain are you in? How depressed are you? How suicidal are you? That is very subjective data, not objective at all, and we can do better. She notes that only 7% of how we communicate comes from our choice of words; 93% is nonverbal. About half of that is facial expressions and gestures, and the other half is vocal intonation, such as how fast you are speaking and how much energy there is in your voice.

The science of emotion has focused on the face for over 200 years, because that's where the field got its start. A French neurologist named Duchenne mapped out all of the facial muscles we have by electrically stimulating people's faces. We do not do that anymore, thankfully.

Lastly, Rana says she and her team use computer vision, deep learning, machine learning and large amounts of data to automate that process. Essentially, the company's own data annotators label the data; a subset of the labeled data becomes training data for the algorithms, and another subset becomes validation data. The team repeats this over and over, continuing to amass data to expand the system's repertoire.
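The article gives no implementation details, so the following is only a rough, hypothetical illustration of splitting annotated data into training and validation subsets. It assumes each annotated sample is a simple (sample_id, label) pair; `split_labeled_data` and its parameters are invented for this sketch and are not Affectiva's code.

```python
import random
from typing import List, Tuple

# Hypothetical record type: (sample_id, emotion_label) as assigned by a human annotator.
Sample = Tuple[str, str]


def split_labeled_data(
    samples: List[Sample], validation_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle annotated samples and hold out a fraction of them for validation."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(samples)  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    n_val = int(len(shuffled) * validation_fraction)
    validation = shuffled[:n_val]  # held-out subset used to check the model
    training = shuffled[n_val:]  # remaining subset used to fit the model
    return training, validation


if __name__ == "__main__":
    # Hypothetical labels, for illustration only.
    labeled = [(f"frame_{i:03d}", "smile" if i % 3 else "smirk") for i in range(10)]
    train, val = split_labeled_data(labeled, validation_fraction=0.2)
    print(len(train), len(val))  # 8 2
```

Re-running the split after each new batch of annotations is one simple way to mirror the "over and over again" loop. Real face datasets are often split by person rather than by frame, so that the same face does not appear in both subsets.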

Legal Battle Over IT Act Intensifies Amid Musk’s India Plans

The outcome of the legal dispute between X Corp and the Indian government c...

Wipro inks 10-year deal with Phoenix Group's ReAssure UK worth

The agreement, executed through Wipro and its 100% subsidiary,...

Centre announces that DPDP Rules nearing Finalisation by April

The government seeks to refine the rules for robust data protection, ensuri...

Home Ministry cracks down on PoS agents in digital arrest scam

Digital arrest scams are a growing cybercrime where victims are coerced or ...

Icons Of India : Anil Kumar Lahoti

Anil Kumar Lahoti, Chairman, Telecom Regulatory Authority of India (TR...

Icons Of India : Arjun Malhotra

Arjun Malhotra, the Chairman of Magic Software Inc., is widely recogni...

Icons Of India : Arundhati Bhattacharya

Arundhati Bhattacharya serves as the Chairperson and CEO of Salesforce...

UIDAI - Unique Identification Authority of India

UIDAI and the Aadhaar system represent a significant milestone in Indi...

ITI - ITI Limited

ITI Limited is a leading provider of telecommunications equipment, sol...

GSTN - Goods and Services Tax Network

GSTN provides shared IT infrastructure and service to both central and...

Indian Tech Talent Excelling The Tech World - REVATHI ADVAITHI, CEO- Flex

Revathi Advaithi, the CEO of Flex, is a dynamic leader driving growth ...

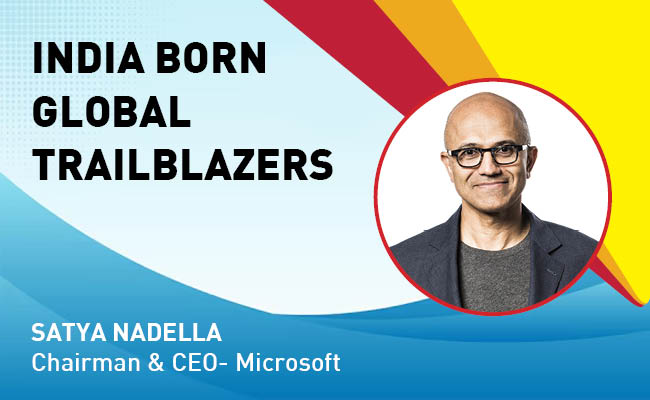

Indian Tech Talent Excelling The Tech World - Satya Nadella, Chairman & CEO- Microsoft

Satya Nadella, the Chairman and CEO of Microsoft, recently emphasized ...

Indian Tech Talent Excelling The Tech World - Steve Sanghi, Executive Chair, Microchip

Steve Sanghi, the Executive Chair of Microchip Technology, has been a ...

of images belongs to the respective copyright holders