Artificial intelligence can now detect human emotion better than people can

By MYBRANDBOOK

We all know what emotion is and how critically it influences every aspect of our lives, from where and how we live, work, learn and play to the decisions we make, big and small. It not only directs how we communicate and connect with each other but also affects our health and well-being. Human emotional intelligence is the ability to recognise our own emotions as well as those of other people, and to use that awareness to guide our behaviour and attitude and to adapt to changes in our environment in order to achieve our goals.

Nowadays we live in a well-equipped digital world. We are surrounded by hyper-connected systems, smart devices and advanced artificial intelligence (AI) systems. In other words, lots of IQ but no EQ (emotional quotient). That’s a problem, especially as our interactions with technology are becoming more conversational and relational. Just look at how we use our smart devices and interact with intelligent agents such as Siri and Amazon’s Alexa. Technologies that are designed to interact with humans need emotional intelligence to be effective. Specifically, they need to be able to sense human emotions and then adapt their operation accordingly.

What is Emotion Artificial Intelligence?

You might also know this as emotion recognition technology. Emotion AI uses machine learning to measure facial expressions of emotion unobtrusively. Using just a standard webcam, the technology first identifies a human face in real time, or in an image or video. Computer vision algorithms then identify key landmarks on the face, for example the corners of your eyebrows, the tip of your nose and the corners of your mouth. Machine learning algorithms analyse the pixels in those regions to classify facial expressions, and combinations of these facial expressions are mapped to emotions. With deep learning approaches, the algorithms can also be tuned very quickly for high performance and accuracy. Emotion AI uses massive amounts of data. That is really important because people around the world don’t look the same, and they certainly express emotion differently when they go about their daily business "in the wild".
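The last step described above, mapping combinations of facial expressions to emotions, can be sketched in a few lines of Python. This is only an illustration: the expression names, the rule table and the 0.5 threshold are assumptions made for the example, not the rules used by any real Emotion AI product, and the expression scores are assumed to come from an earlier classification stage.

```python
# Hypothetical rule table: each emotion lists the facial expressions
# that must all be present. Names and rules are illustrative only.
EMOTION_RULES = {
    "joy": {"smile", "cheek_raise"},
    "surprise": {"brow_raise", "jaw_drop"},
    "anger": {"brow_furrow", "lip_press"},
}


def map_expressions_to_emotions(expression_scores, threshold=0.5):
    """Return the emotions whose required expressions all score at or above threshold."""
    # Keep only the expressions the (assumed) classifier is confident about.
    active = {name for name, score in expression_scores.items() if score >= threshold}
    # An emotion is reported when its required set is a subset of the active set.
    return sorted(
        emotion for emotion, required in EMOTION_RULES.items() if required <= active
    )


# Made-up scores in [0, 1] for a single video frame.
scores = {"smile": 0.9, "cheek_raise": 0.7, "brow_raise": 0.2, "jaw_drop": 0.8}
print(map_expressions_to_emotions(scores))  # ['joy']
```

Here "surprise" is not reported because only one of its two expressions is active, which shows why it is the combination of expressions, rather than any single one, that gets mapped to an emotion.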

So how is Emotion AI being used?

Today more than 1,400 brands use this technology to measure and analyse how consumers respond to digital content such as videos, ads and even TV shows. Emotion data helps media companies, brands and advertisers improve their advertising. Emotion AI is also being integrated into other technologies to make them emotion-aware. With a software development kit (SDK), any developer can embed Emotion AI into the apps, games, devices and digital experiences they are building, so that these can sense human emotion and adapt to it. This approach is rapidly driving more ubiquitous use of Emotion AI across a number of different industries.

Robots like Mabu and Tega use Emotion AI to understand the moods and expressions of the people they interact with. In education, Emotion AI can tell whether a student is frustrated or bored, but what if the learning content could then adapt? The Little Dragon learning app is among the first of such adaptive apps, designed to help children learn language in a more interactive and interesting way. Video games are designed to take us on an emotional journey, but they do not usually change their gameplay based on the emotions of the player. The game Nevermind changes that: this biofeedback thriller gets more bizarre and challenging as players show signs of distress. In healthcare the impact of Emotion AI may be the most significant of all, from drug efficacy testing and telemedicine to research in depression, suicide prevention and autism. The team at Brain Power has built an autism program that is already changing the lives of families with children on the autism spectrum. There are many more examples in automotive, retail and even the legal industry where emotion recognition technology is in use.

Evidently, it is possible to detect whether someone is telling you a bald-faced lie just by looking at how much blood is coursing through their face. To test this theory, scientists created an AI that measured the color of certain parts of a person's face in order to guess at their emotions. The AI was given pictures of people experiencing different emotions and, having had no training in understanding expressions, was forced to make its guesses based entirely on color. Because the photos showed people with blank expressions, humans could not determine their emotions from whether or not they were smiling, so everyone involved had to rely on facial hue alone.

The test was a success. The AI was, in fact, better overall at detecting emotion than humans, who could draw on years of experience reading people's faces. The artificial intelligence guessed happy faces correctly 90 percent of the time, sad faces 75 percent of the time and angry faces 70 percent of the time. Humans guessed happy faces correctly 70 percent of the time, sad faces 75 percent of the time and angry faces 65 percent of the time, so the AI led on happy and angry faces and tied on sad ones. Not only does this demonstrate the competence of artificial intelligence, it also makes a strong case that we can read people's emotions from the color of their faces.
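To make the comparison concrete, the reported figures can be put side by side in a short Python sketch. The per-emotion percentages are the ones quoted above; the unweighted average is an added simplification that assumes each emotion was tested on the same number of faces, which the article does not state.

```python
# Percent of faces guessed correctly, as reported in the article.
ai = {"happy": 90, "sad": 75, "angry": 70}
human = {"happy": 70, "sad": 75, "angry": 65}

# Per-emotion advantage of the AI, in percentage points.
gap = {emotion: ai[emotion] - human[emotion] for emotion in ai}
print(gap)  # {'happy': 20, 'sad': 0, 'angry': 5}

# Unweighted averages (assumption: equal number of faces per emotion).
ai_mean = sum(ai.values()) / len(ai)
human_mean = sum(human.values()) / len(human)
print(round(ai_mean, 1), round(human_mean, 1))  # 78.3 70.0
```

The gap is largest for happy faces, while sad faces show no difference at all between the AI and the human participants.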

The big theory: "The emotion information transmitted by color is at least partially independent from that by facial movement."

Still, there are some limits:

Can you outline the scope of Emotion AI?

Emotion AI is the idea that devices should sense and adapt to emotions like humans do. This can be done in a variety of ways: by understanding changes in facial expressions, gestures, physiology and speech. Our relationship with technology is changing, as it’s becoming a lot more conversational and relational. If we are trying to build technology to communicate with people, that technology should have emotional intelligence (EQ). This manifests in a broad range of applications, from Siri on your phone to social robots and even applications in your car.

Is it possible to analyse emotion through language processing?

Social scientists who have studied how people portray emotions in conversation found that only 7-10% of the emotional meaning of a message is conveyed through the words. We can mine Twitter, for example, for text sentiment, but that only gets us so far. About 35-40% is conveyed in tone of voice (how you say something), and the remaining 50-60% is read through the facial expressions and gestures you make. Technology that reads your emotional state, for example by combining facial and voice expressions, represents the emotion AI space. These channels are the subconscious, natural way we communicate emotion, which is nonverbal and complements our language. What we say is also very cognitive: we have to think about what we are going to say. Facial expressions and speech deal more with the subconscious, and are more unbiased and unfiltered expressions of emotion.
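One way to picture the idea of combining channels is a simple weighted average. The Python sketch below uses the midpoints of the ranges quoted above as weights; treating those proportions as fusion weights is an assumption made purely for illustration, and the per-channel scores (from -1 for very negative to +1 for very positive) are made-up values, not the output of any real system.

```python
# Midpoints of the quoted ranges: 7-10% words, 35-40% voice, 50-60% face.
# Using them as fusion weights is an illustrative assumption.
WEIGHTS = {"words": 0.085, "voice": 0.375, "face": 0.55}


def fuse(channel_scores):
    """Weighted average of per-channel sentiment scores in [-1, 1]."""
    total_weight = sum(WEIGHTS[channel] for channel in channel_scores)
    weighted_sum = sum(WEIGHTS[channel] * score for channel, score in channel_scores.items())
    return weighted_sum / total_weight


# Someone says positive words, but tone and face suggest otherwise (made-up scores).
observation = {"words": 0.8, "voice": -0.4, "face": -0.6}
fused = fuse(observation)
print(round(fused, 2))  # -0.41
```

Even though the words are clearly positive, the combined score comes out negative, which mirrors the point that text sentiment alone only gets us so far.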

Where do the training data and techniques come from that let a machine distinguish emotion?

At Affectiva, we use a variety of computer vision and machine learning approaches, including deep learning. Our technology, like many computer vision approaches, relies on machine learning techniques in which algorithms learn from examples (training data). Rather than encoding specific rules that depict when a person is making a specific expression, we instead focus our attention on building intelligent algorithms that can be trained to recognize expressions.
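The difference between writing rules and learning from examples can be shown with a toy Python sketch. This is not Affectiva's method: it swaps in a very simple nearest-centroid classifier in place of deep learning, and the two features (mouth-corner lift and brow lowering), the labels and the training values are all hypothetical.

```python
from math import dist


def train_centroids(examples):
    """Learn one average feature vector (centroid) per label from labelled examples."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up training data: (mouth_corner_lift, brow_lowering) -> expression label.
training = [
    ((0.9, 0.1), "smile"),
    ((0.8, 0.2), "smile"),
    ((0.1, 0.9), "frown"),
    ((0.2, 0.7), "frown"),
]
centroids = train_centroids(training)
print(predict(centroids, (0.7, 0.3)))  # smile
```

Nothing in the code says what a smile looks like; that knowledge comes entirely from the labelled examples, so adding more varied examples changes the behaviour without changing the program.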

And finally, is it possible to understand the brain activity related to subtle emotional states such as madness or psychological disorders, and might such states even be artificially transplanted?

Legal Battle Over IT Act Intensifies Amid Musk’s India Plans

The outcome of the legal dispute between X Corp and the Indian government c...

Wipro inks 10-year deal with Phoenix Group's ReAssure UK worth

The agreement, executed through Wipro and its 100% subsidiary,...

Centre announces that DPDP Rules nearing Finalisation by April

The government seeks to refine the rules for robust data protection, ensuri...

Home Ministry cracks down on PoS agents in digital arrest scam

Digital arrest scams are a growing cybercrime where victims are coerced or ...

ICONS OF INDIA : SANJAY GUPTA

Sanjay Gupta is the Country Head and Vice President of Google India an...

Icons Of India : ASHISH KUMAR CHAUHAN

Ashish kumar Chauhan, an Indian business executive and administrator, ...

Icons Of India : Dilip Asbe

At present, Dilip Asbe is heading National Payments Corporation of Ind...

UIDAI - Unique Identification Authority of India

UIDAI and the Aadhaar system represent a significant milestone in Indi...

IOCL - Indian Oil Corporation Ltd.

IOCL is India’s largest oil refining and marketing company ...

IREDA - Indian Renewable Energy Development Agency Limited

IREDA is a specialized financial institution in India that facilitates...

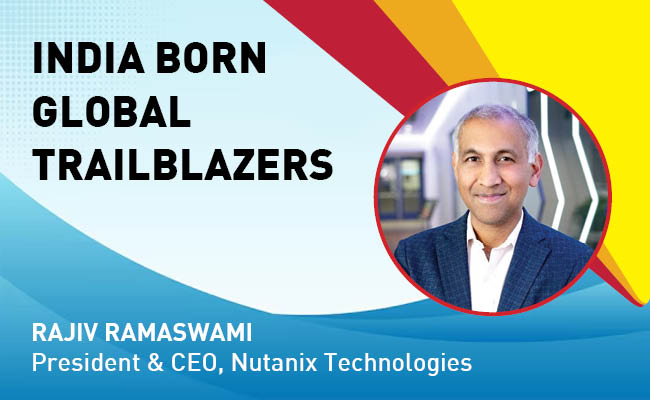

Indian Tech Talent Excelling The Tech World - Rajiv Ramaswami, President & CEO, Nutanix Technologies

Rajiv Ramaswami, President and CEO of Nutanix, brings over 30 years of...

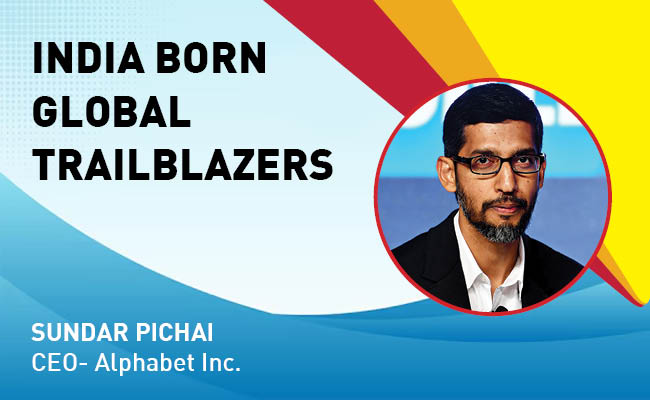

Indian Tech Talent Excelling The Tech World - Sundar Pichai, CEO- Alphabet Inc.

Sundar Pichai, the CEO of Google and its parent company Alphabet Inc.,...

Indian Tech Talent Excelling The Tech World - AJAY BANGA, President - World Bank

Ajay Banga is an Indian-born American business executive who currently...

of images belongs to the respective copyright holders

of images belongs to the respective copyright holders